1. The gold standard book on Bitcoin

The Bitcoin Standard: The Decentralized Alternative to Central Banking (2018) by Saifedean Ammous is the gold standard book on Bitcoin. Instead of viewing Bitcoin news in terms of days, weeks, or even years, it views Bitcoin from the perspective of centuries of monetary history.

In considering this book, I revisited my own economic and legal-theory analyses of Bitcoin, which for the most part cover harmonious, though distinct, territory. The result is a review essay, part book review and part in-depth discussion. A follow-up paper is planned to expand on some of the issues further, particularly where I have now reframed a perspective suggested in my previous writings.

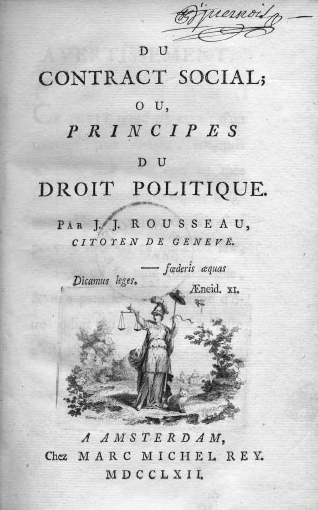

In an unusual, but I think effective, editorial choice, the book’s first 60% is not about Bitcoin, but instead provides essential theoretical and historical background for grasping the scale of Bitcoin’s significance. It walks through the theory and history of money and proto-money collectibles, particularly informed by the Austrian school of economics. A central theme is the role of sound money versus inflationary money in the evolution of societies and cultures, and the wealth and poverty of nations.

Austrian school approaches, in which I had been immersed for years prior to encountering Bitcoin, were the launching point for my writings on the subject, which appeared primarily from 2013–2015 (assembled on my Bitcoin Theory page). For those who do not yet have such a perspective on money—alas, the vast majority—Ammous brings them up to speed in admirable fashion while including details and formulations likely to be useful to veterans as well.

What I immediately considered most intriguing about Bitcoin was its pre-determined monetary policy for an asymptotically declining inflation rate, eventually terminating at zero. It is just such recognition of the centrality of monetary policy to Bitcoin’s importance that Ammous conveys throughout.
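The schedule behind this policy is simple enough to sketch in a few lines. What follows is a minimal illustration, not code from the book or from any Bitcoin client. The constants (a 50-bitcoin initial subsidy, halved every 210,000 blocks) are the widely documented consensus values; the function names `block_subsidy` and `total_supply_after` are hypothetical, chosen only for this example.

```python
# Widely documented Bitcoin consensus constants.
SATOSHIS_PER_BTC = 100_000_000
INITIAL_SUBSIDY = 50 * SATOSHIS_PER_BTC  # new coins per block at launch, in satoshis
HALVING_INTERVAL = 210_000               # blocks between subsidy halvings


def block_subsidy(height: int) -> int:
    """Return the new-coin subsidy, in satoshis, for a block at `height`."""
    halvings = height // HALVING_INTERVAL
    if halvings >= 64:
        return 0
    # Integer right shift halves the subsidy and rounds down each time.
    return INITIAL_SUBSIDY >> halvings


def total_supply_after(epochs: int) -> int:
    """Total satoshis issued once `epochs` full halving periods have elapsed."""
    return sum(
        (INITIAL_SUBSIDY >> epoch) * HALVING_INTERVAL
        for epoch in range(epochs)
    )


if __name__ == "__main__":
    for epoch in range(5):
        height = epoch * HALVING_INTERVAL
        print(epoch, block_subsidy(height) / SATOSHIS_PER_BTC)
    print(total_supply_after(64) / SATOSHIS_PER_BTC)
```

Because each halving rounds down to a whole number of satoshis, the subsidy eventually reaches zero rather than shrinking forever, and the running total stops just short of 21 million bitcoin (2,099,999,997,690,000 satoshis under these constants).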

I assumed that such should likewise have been apparent to others versed in the Austrian school. That it was not, and that Bitcoin was the target of attacks from hard money advocates, became the launching point for my research. Inflationists and money cranks would obviously hate Bitcoin, but what was preventing so many of those with a pro-hard-money perspective from seeing its potential to become a sound money?

I re-examined economic concepts as grounded in the theory of action in the Austrian tradition (praxeology), such as goods, commodity, scarcity, and rivalness, as well as Mises’s regression theorem and Menger’s evolutionary account of monetary emergence through relative liquidity. I argued that each concept and formulation can be applied independently of any need for a material “base” for the goods in question.

Moreover, Austrian-school accounts of the origins and functions of money could be applied to interpret the historical data on Bitcoin’s early evolution. Bitcoin’s history formed a new free-market monetary origin story, one not hidden by the mists of time, but plainly documented over the course of 2009–2011, as discussed in my late-2013 monograph, On the Origins of Bitcoin: Stages of Monetary Evolution (PDF).

It seemed that historical associations of sound money with material backing were keeping some sound-money advocates from seeing that bitcoin could be a commodity and a hard monetary commodity at that. A commodity contrasts with a more specialized good and is characterized by the full interchangeability of products from different producers. In this case, the producers in question are Bitcoin miners and the coins they produce are fungible rather than distinguished or specialized (see my “Commodity, scarcity, and monetary value theory in light of Bitcoin,” [PDF] (Prices & Markets, 20 Oct 2015)).

The economic meaning of “hard,” as Ammous explains, is that more units are difficult to produce in response to increases in demand (5). What is it that makes a monetary unit resistant to production growth? There are many possibilities. Having a particular chemical composition is but one.

Bitcoin’s inflation-resistance rests on a novel basis, as described in my late-2014 article, “Bitcoin: Magic, fraud, or ‘sufficiently advanced technology’?” Yet most Bitcoin critics had not begun to grasp its layered technical underpinnings. They assumed that bitcoins, as digital objects, must be copiable and therefore unreliable. But what all the fuss was about was precisely that bitcoin units were the first digital objects that are not copiable in this way. Quite the contrary, they constitute a new class of scarcity, never before seen, which Ammous labels absolute scarcity (177).

Ammous emphasizes stock/flow ratio as a practical comparative measure of monetary hardness (5–6). As demand to hold a unit rises, can its production be profitably increased? And if so, by how much? It is quite difficult to expand production of gold in response to an increase in demand for it. Moreover, since gold is effectively indestructible, its stock has risen over the centuries relative to the flow of annual mine production. This explains gold’s unique superiority as a monetary asset, even to this day.

Yet Bitcoin is an entirely new development in this regard. Its unit production cannot be expanded at all in response to increased demand. Due to its difficulty-adjustment algorithm, the more processing power comes on line, the more is required to extract new coin. This keeps unit production on schedule no matter how many resources are thrown at accelerating it.
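The feedback loop just described can be shown with a toy model. This is a deliberately simplified sketch, not Bitcoin's actual retargeting code: it works with an abstract "difficulty" number rather than the real proof-of-work target, and it assumes that average block time scales as difficulty divided by hash power. The ten-minute spacing, the 2,016-block adjustment period, and the factor-of-four clamp per adjustment are the commonly documented parameters; the names `retarget` and `simulate` are hypothetical.

```python
TARGET_BLOCK_SECONDS = 600  # ten-minute target spacing
RETARGET_BLOCKS = 2016      # blocks per adjustment period
MAX_ADJUSTMENT = 4.0        # each adjustment is clamped to a factor of 4


def retarget(difficulty: float, actual_period_seconds: float) -> float:
    """Scale difficulty so the next period should take the expected time."""
    expected = TARGET_BLOCK_SECONDS * RETARGET_BLOCKS
    ratio = expected / actual_period_seconds
    ratio = max(1 / MAX_ADJUSTMENT, min(MAX_ADJUSTMENT, ratio))
    return difficulty * ratio


def simulate(hashrates, difficulty: float = 1.0):
    """Return the average block time (seconds) in each period.

    Toy assumption: block time = 600 * difficulty / hashrate, with
    hashrate expressed relative to the starting level.
    """
    block_times = []
    for hashrate in hashrates:
        block_seconds = TARGET_BLOCK_SECONDS * difficulty / hashrate
        block_times.append(block_seconds)
        difficulty = retarget(difficulty, block_seconds * RETARGET_BLOCKS)
    return block_times


if __name__ == "__main__":
    # Hash power doubles after the first period and then stays there.
    print(simulate([1.0, 2.0, 2.0, 2.0]))  # [600.0, 300.0, 600.0, 600.0]
```

In this model, doubling the hash power speeds up blocks for one period only. The next adjustment doubles the difficulty, and block spacing returns to ten minutes, so unit production falls back onto its schedule.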

Nevertheless, this growing distributed processing power is not wasted; it increases the network’s security by steadily raising the costs of attack (173). The energy and investment that might have been channeled into socially destructive inflation is channeled instead into increased network security, which feeds back into further inflation-resistance. As the unit-production schedule unfolds, bitcoin will in a few short years surpass, and then far exceed, gold at the top of the stock/flow ratio charts (198–99), making it the hardest monetary commodity ever known.
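To make the trajectory concrete, here is a rough sketch of how the stock/flow ratio implied by the issuance schedule behaves at the start of each halving period. It is an illustration under stated assumptions, not a reproduction of Ammous's figures: it assumes exactly one block every ten minutes, ignores satoshi rounding and lost coins, and makes no claim about gold's ratio. The name `stock_to_flow` is hypothetical.

```python
BLOCKS_PER_YEAR = 6 * 24 * 365  # assumes exactly one block per ten minutes
HALVING_INTERVAL = 210_000      # blocks between subsidy halvings
INITIAL_SUBSIDY_BTC = 50.0      # new coins per block at launch


def stock_to_flow(epoch: int) -> float:
    """Stock/flow ratio at the start of halving period `epoch` (0 = launch)."""
    # Stock: everything issued in the periods completed so far.
    stock = sum(
        INITIAL_SUBSIDY_BTC / 2**e * HALVING_INTERVAL for e in range(epoch)
    )
    # Flow: one year of issuance at the current period's subsidy.
    flow = INITIAL_SUBSIDY_BTC / 2**epoch * BLOCKS_PER_YEAR
    return stock / flow


if __name__ == "__main__":
    for epoch in range(1, 6):
        print(epoch, round(stock_to_flow(epoch), 1))
```

Each halving cuts the flow in half while the stock keeps growing, so the ratio more than doubles from one period to the next. That compounding is what drives the overtaking of gold that Ammous projects.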

Cash, Ammous argues, formerly meant not only a tradable bearer instrument but, during the classical gold standard era, a final means of settlement. It referred to physical metal (238). The modern sense of cash as pocket money has misled many into believing that bitcoin, in order to serve as digital “cash,” must be usable for everyday transactions on chain. Instead, Ammous argues that for both technical and economic reasons, on-chain bitcoin’s more potent natural role may well be as a means of settlement, largely, though not exclusively, to underpin far more efficient systems built on it. Such an arrangement would be in keeping with this older sense of the word cash.

Ammous breaks down Carl Menger’s concept of salability, central to the latter’s evolutionary account of monetary emergence, into three components: salability across space, time, and scale. He finds that Bitcoin excels in each area:

With its supply growth rate dropping below that of gold by the year 2025, Bitcoin has the supply restrictions that could make it have considerable demand as a store of value; in other words, it can have salability across time. Its digital nature that makes it easy to safely send worldwide makes it salable in space in a way never seen with other forms of money, while its divisibility into 100,000,000 satoshis makes it salable in scale. (181)

Ammous challenges the popular notion that “blockchain technology” is likely to be useful for much else than decentralized digital cash, that is, Bitcoin (257–72). He examines the “other use cases” advertised for altcoins (non-bitcoin cryptocurrencies) and finds that each suffers from a similar problem—centralized and conventional database methods can or could do most or all of these things more efficiently and at less cost than a block chain. A decentralized block chain is a burdensome and costly design. What it produces must be valuable and unique enough to justify its costs.

With Bitcoin, the block chain arrived as the unexpected solution to a well-defined problem. Many “other use cases” are solutions looking for problems, not to mention windfalls, venture capital, research grants, or social tracking and control leverage. Some useful applications may emerge for private and centralized blockchain-like structures, such as the internal cross-border transfers for which some large banks have already begun using them, but this is entirely different from decentralized digital cash on a public permissionless network, suitable for use with complete stranger counterparties.

2. From monetary policy to immutability

Parts of Chapter 10 (217–274) highlight Bitcoin’s incentives for different types of participants, such as the importance of decentralized full node operators independently choosing which software to run, the desire of developers to offer software that will be used, and the incentives for miners to stay on the dominant network. The theme here is that much of the Bitcoin network’s worth, in light of its valuable fixed monetary policy, lies in resistance to consensus-rule changes. Any change requiring a backward-incompatible hard fork to the network must attract sufficient support among relevant parties that enough of them switch in a timely way; otherwise the network could split into incompatible chains.

Cryptocurrencies created after Bitcoin, Ammous argues, suffer here from the outsized presence of founding development teams or other identifiable backers.

Without active management by a team of developers and marketers, no digital currency will attract any attention or capital in a sea of 1,000+ currencies. But with active management, development, and marketing by a team, the currency cannot credibly demonstrate that it is not controlled by these individuals. With a group of developers in control of the majority of coins, processing power, and coding expertise, the currency is practically a centralized currency where the interests of the team dictate its development path. (254)

Today, this even begins to include mega-corporations and states mulling their own initiatives to mimic some of Bitcoin’s peripheral characteristics while omitting its most significant and critical ones. Such projects see the attractions of the cryptocurrency revolution squarely in payment ease or transfer convenience and not in the prospect of a new monetary asset with unprecedented hardness.

Any group of founders and backers, whether states, corporations, or founding teams, can lead or promote hard-fork alterations (254–55). For Bitcoin alone, the only corresponding founding figure was always anonymous and is now long absent, having withdrawn in 2010 and not been heard from since (251–52). Bitcoin’s large number of software development contributors, node operators, and miners are organizationally and jurisdictionally independent, located worldwide.

This is a key part of Ammous’s central argument with regard to Bitcoin; its value lies in its immutability, meaning that no party is in a position to change its consensus rules (222–27).

The reason that even seemingly innocuous changes to the protocol are extremely hard to carry out is the distributed nature of the network, and the need for many disparate and adversarial parties to agree to changes whose impact they cannot fully understand, while the safety and tried-and-tested familiarity of the status quo remains fully familiar and dependable. Bitcoin’s status quo can be understood as a stable Schelling point, which provides a useful incentive for all participants to stick to it, while the move away from it will always involve a significant risk of loss. (225)

Consensus rule fixity became a bone of contention, most prominently around 2014–2017, amid debate on a series of proposals to increase Bitcoin’s 1MB block size limit. This limit restricts the amount of transaction data that can be added to the chain per block (in effect, per time period, since a new block appears on average every 10 minutes). Like the monetary policy, this is a consensus rule that can only be changed through a backward-incompatible hard fork. Each proposal failed to attract the necessary support. Disputants differed not only on the height of the limit itself, but also on the wisdom and necessity of the hard fork needed to change it (and, speaking of Schelling points, change it to what, exactly? There were many competing proposals).
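The capacity ceiling implied by this rule is a matter of simple arithmetic, sketched below. The 1MB limit and ten-minute spacing come from the discussion above; the average transaction size of 250 bytes is purely an illustrative assumption, as is the function name `max_tx_per_second`.

```python
BLOCK_SIZE_LIMIT_BYTES = 1_000_000  # the 1MB limit discussed above
BLOCK_INTERVAL_SECONDS = 600        # average ten-minute block spacing


def max_tx_per_second(avg_tx_bytes: float) -> float:
    """Upper bound on on-chain transactions per second for a given average size."""
    tx_per_block = BLOCK_SIZE_LIMIT_BYTES / avg_tx_bytes
    return tx_per_block / BLOCK_INTERVAL_SECONDS


if __name__ == "__main__":
    # 250 bytes is a hypothetical average, used only for illustration.
    print(round(max_tx_per_second(250), 2))
    # Halving the average size doubles throughput with no change to the limit.
    print(round(max_tx_per_second(125), 2))
```

Under the assumed 250-byte average, the ceiling works out to fewer than seven transactions per second. The second call shows the other lever available: fitting each transaction into fewer bytes raises throughput without touching the limit itself.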

A major argument in favor of the existing block size limit is that a significant growth in block sizes would raise the cost of operating a full node, reducing their number and making the network more vulnerable to collusion or attack. The more limited the network requirements remain, the more easily wholly independent nodes can operate in separate, unique locations. In total, an estimated 9,500 nodes, including independents along with cloud instances and institutional nodes, are reachable as of this writing. By country, the US and Germany lead, contributing 25% and 20%, respectively.

The prevailing view in the Bitcoin community is that if the block size limit, by preserving more rather than fewer nodes, tips the balance toward added marginal catastrophe insurance for the system while still keeping it running well enough, this must be given more weight than any non-critical increase in data throughput. This is even more so when other methods exist or are in development for increasing on-chain transaction throughput via increased efficiency and transactional density. The latter refers to any method for squeezing more transactions into the same data (for current examples, see “Taproot-Schnorr Soft Fork” (17 Aug 2019) by Mike Schmidt). Such density-seeking strategies do not entail the trade-off between transaction volume and node burden that a simple increase in data capacity does. Besides increasing on-chain or “Layer 1” density, many options exist for moving transactions off-chain to various “Layer 2” venues. The old saying, “If it ain’t broke, don’t fix it” applies.

And it is here that the precise meanings attached to ‘running well enough’ or ‘ain’t broke’ become critical. On this, a conflict of visions came into focus between a future of using the main chain directly as a large-scale payment system (Visa and PayPal competitor) and one of using it as a sound money system (dollar and gold competitor). Each vision suggests different priorities. A transaction capacity deemed suitable to support a digital-gold vision (ain’t broke) may be deemed insufficient to support a mass-payment-system vision (is broke). And higher capacity for the mass-payment-system vision (fixed it) implies lower security for the digital-gold vision (could break it). If these visions are indeed incompatible, which ought to take priority?

Ammous offers compelling arguments for the digital-gold vision and against the mass payments vision. Among these:

Current state-of-the-art technology in payment settlements has already introduced a wide array of options for settling small-scale payments with very little cost. It is likely that Bitcoin’s advantage lies not in competing with these payments for small amounts and over short distances; Bitcoin’s advantage, rather, is that by bringing the finality of cash settlement to the digital world, it has created the fastest method of final settlement of large payments across long distances and national borders. It is when compared to these payments that Bitcoin’s advantages appear most significant. (207)

The invention of Bitcoin has created, from the ground up, a new independent alternative mechanism for international settlement that does not rely on any intermediary and can operate entirely separate from the existing financial infrastructure. (205)

Ammous argues that Bitcoin’s status quo of economic policies, block size limit included, is ideal because it helps protect the most important value for bitcoin as digital gold, the change-resistance of its monetary policy. The functions of higher-volume transacting can be covered through other means. Even if cryptographic substitutes did not gain widespread traction, more traditional banking models could fill the gap.

Bitcoin can be seen as the new emerging reserve currency for online transactions, where the online equivalent of banks will issue Bitcoin-backed tokens to users while keeping their hoard of Bitcoins in cold storage, with each individual being able to audit in real time the holdings of the intermediary, and with online verification and reputation systems able to verify that no inflation is taking place. (206)

The block size limit and the bitcoin unit production schedule must nevertheless still be viewed as distinct phenomena in monetary-theory terms. Placing them together under an argument for the immutability of all of Bitcoin’s consensus rules does not remove this distinction.

Ammous correctly explains that the production of new bitcoin units, as an example of the production of money units, is quite unlike the production of consumer and producer goods and services. Any number of money units, provided sufficiently divisible, will do equally well for a society of money users. That pumping out ever more money units is not better, and is indeed far worse, for a society of money users as a whole is a central insight of the Austrian approach to money.

However, regarding the height of the block size limit, the immediate issue is not the number of money units produced, but the number of transactions that miners can elect to include in a candidate block. Unlike producing more money units, this is a productive service performed in exchange for specific payment. I have described this as the market for on-chain transaction-inclusion services. This exists in concert with a non-market for verification & relay services, which are only compensated indirectly, having no direct pricing mechanism.

In sum, monetary theory, for its part, hands Bitcoin a single-case special justification for having an arbitrary economic limit fixed in code, and this applies to its monetary policy only. This justification derives from a unique peculiarity of money as an economic good, and does not extend, at least not directly, to any other arbitrary economic limit, such as the block size limit.

One can conclude that the block size limit may be defensible on other grounds, but it is not as unmistakably defensible as the unit-supply schedule itself. Ammous spends the bulk of the book setting up his defense of Bitcoin’s supply schedule in particular (arguably the first 70% (Chaps 1–8)), and then in the latter part shifts to a supportive explanation of the all-inclusive inalterability of all of Bitcoin’s consensus rules (222–30), not just that of its money supply rules.

This could be tempered with a finer-grained recognition that other consensus rules do not enjoy the same degree of air-tight justifiability (from a pro-hard-money standpoint, at least) as the money supply rules in particular. This does not make these other rules indefensible, but it does show that supporting them stands on looser, more derivative ground.

3. The primacy of sound money over permissionless transacting

Besides Bitcoin’s monetary policy, another of its main attractions is disintermediation, or in the famous phrase from the Bitcoin white paper, the elimination of “trusted third parties.” Bitcoin can be used to transfer value over arbitrary distance without contracting with an intermediary service. It is cash-over-internet.

Some early enthusiasts and promoters seemed to view Bitcoin’s leading contribution as freeing the people for permissionless transacting. A trusted third party is in a position to refuse service based on identity or purpose, reflecting internal corporate policies or jurisdictional prohibitions. Early in Bitcoin’s history, the promise of permissionless transacting fueled the rise of Silk Road and later other Bitcoin-mediated prohibition-resistance marketplaces.

One critique of the digital gold vision for Bitcoin is that increased reliance on off-chain Layer 2 services could recapitulate old-school intermediation, bringing back trusted third parties in new hats, and standing between most ordinary users and Bitcoin’s promise of disintermediation. Instead of end users holding bitcoin (historically, gold coins), they will be limited to using bitcoin substitutes for the most part (historically, paper notes and deposit entries), which could then be inflated far more freely.

However, intermediation in Bitcoin is not of the “standing between” type since nothing forbids end users from employing Layer 1 themselves, either directly or as a means of auditing Layer 2 services, the latter completely unprecedented in the gold case. Moreover, unlike historical gold-based currencies, Bitcoin has no favored legal status. Layer 2 options can only attract users if they provide some service that these users prefer. For example, certain Layer 2 bitcoin substitutes could come to offer privacy, speed, cost, and other advantages over Layer 1 bitcoin. Layer 2 units could overcome their own drawbacks (principally, that of not being on-chain bitcoin) by offering counterbalancing values that give them a net advantage for various applications. In the case of cryptographic substitutes, they can form a direct link to specific on-chain bitcoin units, making their “backing” specific rather than pooled, and therefore easier to audit.

Intermediation as such can be a natural outcome of the hierarchical structure of economic specialization and the division of labor. It is most likely unobjectionable provided that it occurs within a voluntary context, which can make it a benefit rather than uninvited meddling. The natural, emergent hierarchies and structures of the voluntary sector must be carefully distinguished from the compulsory-sector hierarchies that the state nurtures and sustains through force and threats.

Using on-chain bitcoin directly remains permissionless in that the network is open to any node running compatible software. The significance of eliminating trusted third parties persists in that any party can join Layer 1 and interact directly with any other party on it without permission. All that is required—from the standpoint of Bitcoin itself—is suitable hardware, consensus-compatible software, and network connectivity, no permission slips.

However, this in no way implies a certain costlessness of joining the network, and it does not mean that Layer 1 must remain suited to any imagined use at any wished-for cost level. If eventually the typical Layer 1 user were a Layer 2 intermediary or financial institution, this would have been the outcome of a voluntary-sector evolution toward improved performance at global scale. Bitcoin would still be providing a non-inflationary monetary base open to direct access by anyone who valued it sufficiently to join the network. Open entry is not the same as costless entry. And from a market-oriented perspective, it is openness of entry that is critical to competitive health.

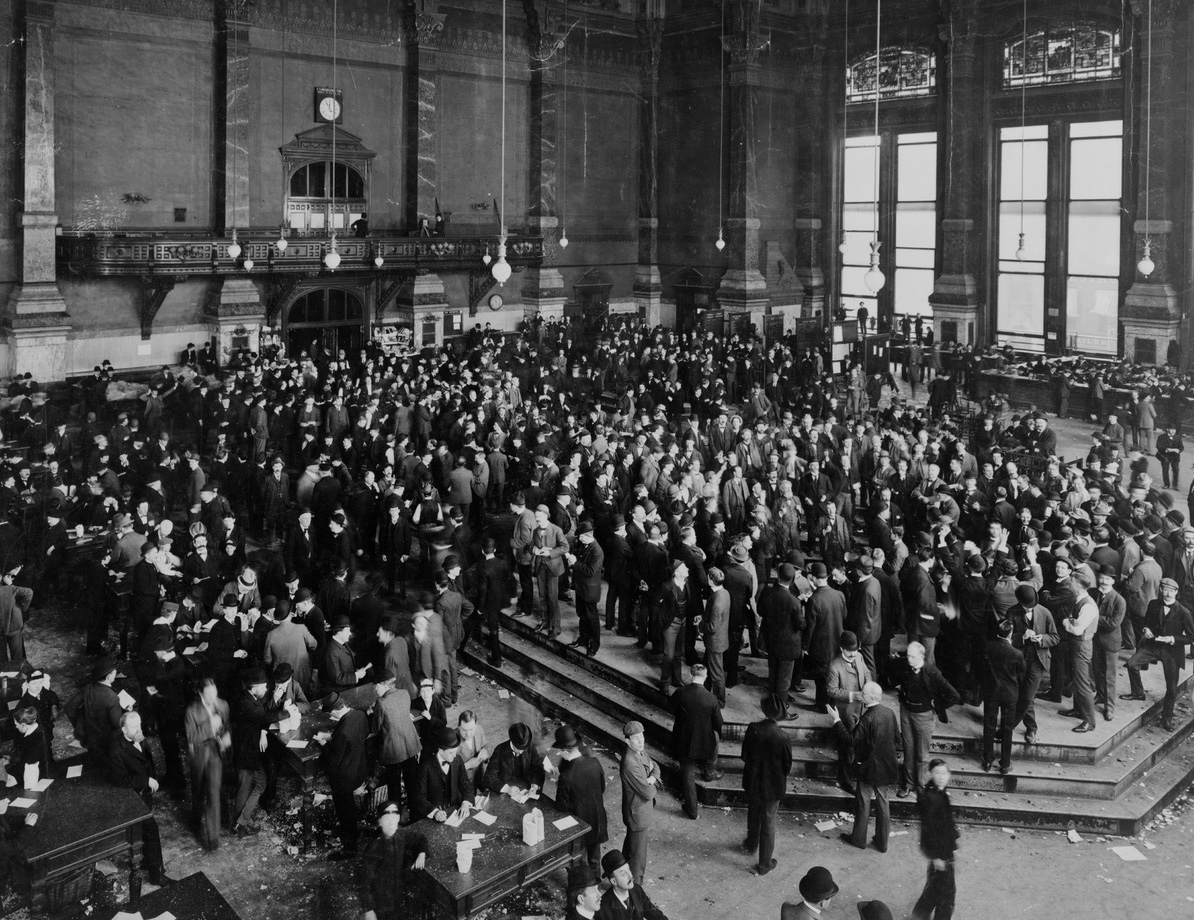

This contrasts with the current money and banking system built on nationally and internationally managed fiat money, created and maintained to facilitate the inflation- and debt-financing of the interventionist state and its long follower-train of profiting cronies. Unlike Bitcoin, which is open to all entrants, direct participation in key roles in the conventional money and banking system is restricted to vetted cartel members and well-trained, paid sympathizers.

But a closed system built from the ground up to run on rotten money cannot be fundamentally reformed. An open system built on sound money is capable of reforming itself through continuous improvement in the context of free competition.

Bitcoin could represent a “decentralized alternative to central banking” in that any individual or institution can join without a cartel membership and begin to interact with any other party on the network regardless of geographic location or political jurisdiction. The size and composition of such parties and the uses to which they put Layer 1, for direct use or service provision, would naturally evolve with time and social progress. However, bitcoin’s monetary hardness and direct auditability make it an unsuitable base for the inflation-pyramiding schemes of conventional banking as we know it.

It is important to recall that banking as such is not inherently corrupt; instead, it is corrupt in that it is operated as a state-orchestrated cartel running on unsound money. Key services that banking offers are something that people want to use.

In the midst of the very common anti-bank rhetoric that is popular these days, particularly in Bitcoin circles, it is easy to forget that deposit banking is a legitimate business which people have demanded for hundreds of years around the world. People have happily paid to have their money stored safely so they only need to carry a small amount on them and face little risk of loss. (237)

Between Bitcoin’s two features of providing sound money and permissionless transacting, the first is of far greater significance, the second more a functional support. Engagement in mutually consensual commercial association in the face of unjust restrictions offers an annoyance to the existing system of rule, but one that is addressable through forensic procedures and totalitarian justice, as several pioneers of bitcoin-mediated prohibition-resistance marketplaces have discovered to their detriment.

Bitcoin has also been touted more generally for its always-on service and borderless convenience for payments and transfers. However, conventional financial systems and services, awakened from incumbent slumber by upstart cryptocurrency competition, can readily improve the speed, availability, and pricing of their own online payment and transfer offerings, and have been doing so.

In contrast, Bitcoin’s unique and durable competitive advantage lies elsewhere—in its unprecedented monetary hardness. A paradigm shift away from fiat-money mediated, politically controlled central banking is of wider-reaching potential impact than either individual permissionless transacting or added convenience. It is a path beyond major systemic drawbacks of the modern nation-state system. The steady heat of a hard money alternative could gradually evaporate the conventional system’s corrupt lifeblood—its ever-depreciating fiat money—the shadowy chief financier of its socially destructive inflation- and debt-ridden practices.

As a bonus, over the longer-term, such a monetary revolution could also aid in getting beyond the conventional system’s primitive meddling in mutually consensual matters, a major driver of interest in permissionless transacting to begin with. In Thoreau’s formulation, “there are a thousand hacking at the branches of evil to one who is striking at the root.” Individual-level permissionless transacting can ultimately only hack at the branches of the modern state’s evils whereas the mere existence of a sound money alternative strikes at their root.

4. As Bitcoin gradually eats the world of monetary assets…

The Bitcoin Standard makes the case that Bitcoin is not only analogous to the classical gold standard, but in important respects has at least the theoretical potential to be superior to it. For those already versed in hard-money-oriented Austrian-school approaches, as well as those brought up to speed by reading this book, the enormity of this potential contribution to society will stand out. Bitcoin could in the longer term come to fill the most neglected niche of all, sound base money, the production rate of which cannot be increased by any party—private or public, individual or institutional.

Bitcoin can be understood as a sovereign piece of code, because there is no authority outside of it that can control its behavior. Only Bitcoin’s rules control Bitcoin, and the possibility of changing these rules in any substantive way has become extremely impractical as the status-quo bias continues to shape the incentives of everyone involved in the project. (253)

For all the common warnings about how risky Bitcoin is (that it is unproven, that its market price is volatile, that managing it requires specialized knowledge and practice, and that access to its units can be lost), there could also be risks in shunning it altogether. What if it succeeds?

Ammous recounts episodes when a money with a superior stock/flow ratio has driven out a money with a lesser one. This includes gold driving out silver (31–33), as well as several more obscure historical cases (16). Each time, those left holding the demonetizing assets—in some cases specific central banks and residents of the corresponding countries—have suffered steady, serious, and permanent wealth losses.

Ammous estimates that “around the year 2022, Bitcoin’s stock-to-flow ratio will overtake that of gold, and by 2025, it will be around double that of gold and continue to increase quickly into the future while that of gold stays roughly the same” (199). The very strongest of the fiat currencies, the Japanese yen and the Swiss franc, equaled Bitcoin’s stock/flow ratio back in 2017 (198), but Bitcoin has by now surpassed them.

If Bitcoin’s relative stock/flow ratio does indeed help enable it to eat the world of monetary assets, it can take its time enjoying the meal. Major transitions would take time, with twists and turns along the way, and catastrophic risks can never be wholly eliminated. Since this is an all-voluntary system, however, transitions can only proceed along opt-in paths, in which each individual and institution decides at the margins that its next step is likely to be in its own interests.

In the meantime, anyone who has not read The Bitcoin Standard should do so. The highlights above can only indicate some of the key outcomes of a detailed, well-supported presentation. Beginners will be brought up to speed in an engaging fashion, while even those already well-versed in both Austrian economics and Bitcoin are likely to come away with both new details and an integrated, readable narrative that never loses sight of that which is most important and remarkable about Bitcoin, its potential to become a hard monetary unit in a soft age of inflation.